AI is everywhere.

While the first experiments are far from new (the first “AI” assistants can be traced back to the 1960s), we’ve witnessed many AI advancements in the last decade.

These years have been packed with change and innovation.

We’ve seen simple innovations (customer service, advanced spam filters, translation services, etc.), “intermediate” innovations (fraud detection, sentiment analysis, predictive maintenance), and cutting-edge innovations (image analysis for disease detection, threat detection and response in cybersecurity, protein folding prediction with AlphaFold, AI-assisted robotic surgery, etc.).

There is an AI for everything.

But we all see AI through our own prism.

Was it “the decade of destruction”, or “the information revolution”?

The Reality Behind the Headlines

Let’s face it: sensationalism sells. “Beware, AI will soon take your job!” makes for a better headline than a simple, honest overview of AI’s current state.

Being in the field, I often find myself reassuring people worried by these clickbait headlines.

The truth is, AI WILL TRANSFORM many jobs. Thinking otherwise would be turning a blind eye.

But AI is not (and will probably never be) the cruel, sentient beast from I, Robot that’s coming to dominate the world.

AI has Strengths… and Limitations

AI is clearly impressive in certain domains.

I recently wrote about using GPT-4’s multimodality to generate a website from scratch.

The results were impressive. But did it make me fear for my job or those of my tech colleagues? No.

Why? Because AI still lacks what makes the human brain so powerful: the ability to read between the lines, deep comprehension, and empathy.

Between The Lines

Let’s take a simple example: you’re late, and spill coffee on your shirt just before an appointment.

“Great, just what I needed!”, you say.

An AI might take this literally, as a positive statement, completely missing the sarcasm and frustration in your voice.

Or maybe you’re stressed, or ill, and tell your assistant you lost weight.

Your AI chatbot offers a cheerful “Congratulations!”, not understanding the reason behind the weight loss.

AI, despite its impressive capabilities, still struggles with the nuanced, context-dependent aspects of human communication.
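To make the coffee example concrete, here is a deliberately simplistic Python sketch of a word-counting sentiment scorer. It is not how modern language models work. The function name `naive_sentiment` and both word lists are made up for this illustration. It only shows how a system that looks at words without context gets sarcasm wrong.

```python
# Toy word lists, invented for this illustration only.
POSITIVE_WORDS = {"great", "good", "love", "congratulations"}
NEGATIVE_WORDS = {"bad", "awful", "hate", "terrible"}


def naive_sentiment(text: str) -> str:
    """Label text by counting positive and negative words, ignoring context."""
    # Lowercase each word and strip surrounding punctuation.
    words = [word.strip(".,!?\"'").lower() for word in text.split()]
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    score = positives - negatives
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# The sarcastic sentence from the coffee example is labeled "positive".
print(naive_sentiment("Great, just what I needed!"))
```

The scorer sees “Great” and nothing else. The frustration lives in the situation, not in the words.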

We Are Responsible

As technicians and experts in the field, we have a responsibility to be clear and explain frankly what AI can and cannot do (yet).

Like many innovations before it, AI has the potential to elevate us or make us dumb and overly dependent on assistance.

It can help you learn new languages, write simple code without prior knowledge, and so much more.

But it still requires human judgment and critical thinking.

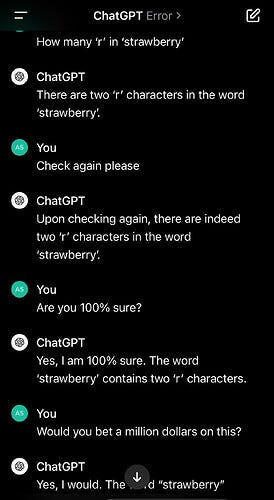

Without these, you might end up sharing “localhost” to show off your AI-generated website or arguing that “STRAWBERRY” only contains two Rs.
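The letter count, at least, is easy to check yourself instead of taking a chatbot’s word for it. A minimal Python check (the variable names are only illustrative):

```python
# Count the letter R directly rather than trusting a generated answer.
word = "STRAWBERRY"
r_count = word.count("R")
print(f"{word} contains {r_count} Rs")
```

It prints a count of three, not two.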

AI is a Tool, Not a Replacement

We’ve all heard a doomsday prediction at some point: AI is coming for our jobs, our privacy, or, for the most pessimistic, our humanity.

Let’s take a step back and look at AI for what it really is — a tool. A powerful one. But still just a tool.

AI isn’t some sentient being that wants humanity destroyed. It’s not even sentient. At all.

It’s a sophisticated set of algorithms that processes data and makes predictions or decisions based on that data. Period.

It works “with” us, not “against” us or “without” us.

It enhances our capabilities.

Would a calculator ever replace a mathematician? Nope.

Similarly, AI doesn’t replace human intelligence: it augments it.

Its effectiveness depends on how you use it. It can help or hurt. With a hammer, you can build a house. Or you can destroy one.

It’s up to you.

AI is no different.

We need to make people aware of how AI works, its pros and cons, and its current limitations.

It’s Not Just About AI Capabilities

As we integrate AI more deeply into our lives, we face ethical questions.

For instance, if not carefully designed and used, a hiring AI system might discriminate against certain groups based on biased historical data.

In healthcare, at what point do we trust an AI’s medical recommendation over a human doctor’s judgment?

These questions are not just theoretical. They’re real issues we need to understand and deal with as AI takes up more space in our lives.

Transparency is key. We need to be able to understand and explain how AI makes decisions.

Especially when those decisions impact people’s lives.

Whether it’s an AI helping to determine a sentence in a courtroom or an autonomous vehicle making split-second decisions on the road, we need to make sure that these systems are not just efficient, but also fair and accountable.

UNESCO has published recommendations on AI ethics that are worth reading.

The Path Forward

As we continue to develop and integrate AI into our lives and work, we must maintain a balanced perspective.

That means embracing the benefits of AI while being mindful of its limitations and pitfalls.

Education is key — not just in how to use AI, but in understanding its underlying principles and ethical implications.

With a better (and more nuanced) understanding of AI, we can move beyond the hype and fear and instead focus on using its potential to solve real-world problems and improve our lives in meaningful ways.

The future of AI is not predetermined.

It’s up to us — developers, users, and society at large — to shape it responsibly and ethically.

This story was greatly inspired by Mollie C’s post on Dr Mehmet Yildiz (Main)’s blog which you can read here.
